Saidot Guide

An Introduction to the EU AI Act

A Practical Guide to Governance,

Compliance, and Regulatory Guidelines

Are you responsible for ensuring your organisation's AI systems comply with the EU AI Act? This flagship legislative framework, with its innovative risk-based approach to regulation, is setting the tone for global AI governance efforts. This guide offers practical insights to help you navigate the new AI Act’s requirements, risk classifications, and best practices for implementation.

Equip yourself with strategies to establish robust AI governance frameworks, conduct risk assessments, implement human oversight, and foster transparency – all while minimising legal risks and penalties. To prepare your organisation for the AI regulation that will shape the responsible development and use of these powerful technologies across Europe and beyond, read on.

The European Union (EU) AI Act is the world's most comprehensive regulation on artificial intelligence (AI). Critically, it doesn’t just apply to EU-based organisations. It applies to all organisations that bring AI systems to the EU market as well as those that put them into service within the EU.

After lengthy negotiations over the Act's technical details, EU lawmakers have created a person-centred legal framework designed to safeguard individuals’ fundamental rights and protect citizens from the most dangerous risks posed by AI systems.

The purpose of the EU AI Act is to "improve the functioning of the internal market by laying down a uniform legal framework in particular for the development, the placing on the market, the putting into service and the use of artificial intelligence systems (AI systems) in the Union, in accordance with Union values, to promote the uptake of human centric and trustworthy artificial intelligence (AI) while ensuring a high level of protection of health, safety, fundamental rights as enshrined in the Charter of Fundamental Rights of the European Union (the ‘Charter’), including democracy, the rule of law and environmental protection, to protect against the harmful effects of AI systems in the Union, and to support innovation".

The Act was created to safeguard users and citizens and foster an environment where AI can thrive responsibly. Furthermore, the Act aims to proactively minimise risks associated with certain AI applications before they occur. This approach underscores a commitment to harnessing AI's benefits while putting human welfare and ethical considerations first.

The Act categorises AI systems based on their associated risks, with rules based on four distinct risk categories:

Unacceptable risk: These systems are banned entirely due to the high risk of violating fundamental rights (e.g. social scoring based on ethnicity).

High risk: These systems require strict compliance measures because they could negatively impact rights or safety if not managed carefully (e.g. facial recognition).

Limited risk: These systems pose lower risks but still require some level of transparency (e.g. chatbots).

Minimal risk: The Act allows the free use of minimal-risk AI, like spam filters or AI-powered games.
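For engineering teams, the four tiers can be modelled as a simple enumeration. This is a minimal sketch: the `RiskTier` enum, the `EXAMPLES` mapping and the `may_be_deployed` helper are hypothetical names, and the example assignments only mirror the illustrations above rather than a legal classification of any real system.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk tiers of the EU AI Act, with the treatment described in this guide."""
    UNACCEPTABLE = "banned entirely"
    HIGH = "strict compliance measures"
    LIMITED = "transparency requirements"
    MINIMAL = "free use"


# Illustrative mapping that mirrors the guide's examples; not a legal assessment.
EXAMPLES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "facial recognition": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def may_be_deployed(tier: RiskTier) -> bool:
    """Only the unacceptable tier is prohibited outright; the others carry obligations of varying weight."""
    return tier is not RiskTier.UNACCEPTABLE


for use_case, tier in EXAMPLES.items():
    print(f"{use_case}: {tier.name.lower()} risk, {tier.value}, deployable={may_be_deployed(tier)}")
```

Keeping the tier as an explicit value on each system record makes it easy to attach the matching obligations later in the compliance workflow.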

The AI Act introduces specific transparency requirements for certain AI technologies, including deepfakes and emotion recognition systems. However, the Act does not apply to AI systems used exclusively for military, defence, or national security purposes, or to AI systems and models developed and put into service solely for scientific research and development.

Interestingly, providers of free and open-source AI models are largely exempt from the obligations normally imposed on AI system providers. The exception is providers of general-purpose AI (GPAI) models that pose significant systemic risks.

A notable aspect of the EU AI Act is its enforcement mechanism, which allows national market surveillance authorities to impose fines for non-compliance. This regulatory framework aims not only to govern the development and deployment of AI systems that will be put into service in the EU, but also to ensure these technologies are developed and used in a manner that is transparent, accountable, and respectful of fundamental rights.

Both organisations bringing AI systems to the EU market and those putting them into service in the EU will have new obligations and requirements under the Act.

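The EU AI Act outlines specific requirements for five groups: providers of high-risk AI systems; deployers of high-risk AI systems; providers and deployers of specific systems with transparency risk; providers of general-purpose AI (GPAI) models; and providers of GPAI models with systemic risk.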

Non-technical stakeholders may be confused by the terms ‘neural networks’, ‘machine learning’, ‘deep learning’, and ‘artificial intelligence’, which are related but distinct. It is therefore important to understand how the EU AI Act defines the term ‘AI’.

The EU AI Act's definition closely follows the OECD's definition of an AI system. Article 3(1) defines an AI system as “a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments”.

Notably, the Act acknowledges the varying degrees of autonomy and adaptiveness that AI systems may show following their deployment.

According to Article 3(63) of the EU AI Act, a general-purpose AI model is “an AI model, including where such an AI model is trained with a large amount of data using self-supervision at scale, that displays significant generality and is capable of competently performing a wide range of distinct tasks regardless of the way the model is placed on the market and that can be integrated into a variety of downstream systems or applications, except AI models that are used for research, development or prototyping activities before they are placed on the market”.

The EU’s definition of general-purpose AI (GPAI) models is based on the key functional characteristics of a GPAI model, in particular, its generality and capability to competently perform a wide range of distinct tasks.

GPAI models are defined based on criteria that include their generality, their capability to competently perform a wide range of distinct tasks, and whether they can be integrated into a variety of downstream systems or applications.

The number of parameters can also help determine whether a model is general-purpose. The Act indicates that models with at least a billion parameters, trained with a large amount of data using self-supervision at scale, should be considered to display significant generality.
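The parameter indicator can be expressed as a one-line check. A minimal sketch with the hypothetical function name `displays_significant_generality`: it captures only this one indicator, and a model below the threshold may still be general-purpose if it can competently perform a wide range of distinct tasks.

```python
ONE_BILLION = 1_000_000_000


def displays_significant_generality(num_parameters: int, self_supervised_at_scale: bool) -> bool:
    """Indicator mentioned in the Act: at least a billion parameters AND training on a
    large amount of data using self-supervision at scale. A signal, not a definitive test."""
    return num_parameters >= ONE_BILLION and self_supervised_at_scale


print(displays_significant_generality(7_000_000_000, True))  # True
print(displays_significant_generality(350_000_000, True))    # False
```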

A general-purpose AI system is created when a general-purpose AI model is integrated into or forms part of an AI system. Due to this integration, the system can serve a variety of purposes. A general-purpose AI system can be used directly or integrated into other AI systems.

For more information, refer to Recital 97 of the EU AI Act.

The EU AI Act is the world's first comprehensive legal framework on AI. It entered into force on 1 August 2024, with further obligations and penalties applying in stages between 6 and 36 months after that date.

Having built the EU AI Act on existing international standard-setting around AI ethics, the EU aims to facilitate a global convergence on best practices and well-defined risk categories. This regulation is intended as an example for other countries as they begin to align their regulatory approaches.

01

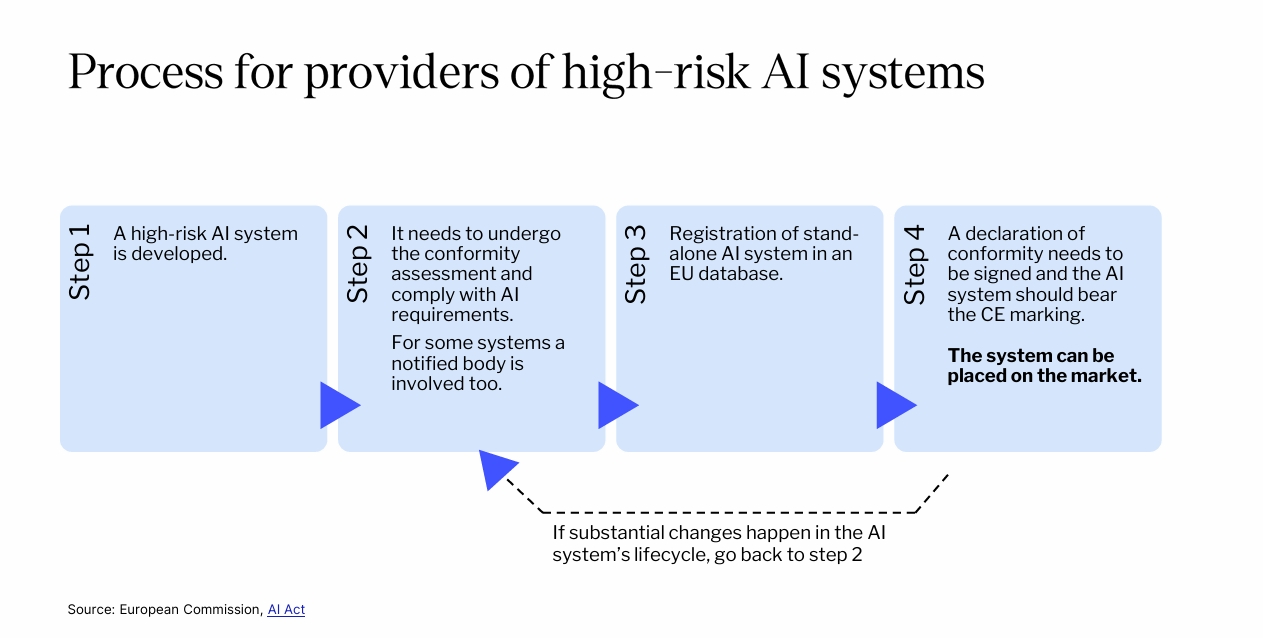

Providers of high-risk AI systems

The EU AI Act requires providers of high-risk AI systems to establish a comprehensive risk management system, implement robust data governance measures, prepare technical documentation, maintain meticulous records, and provide transparent information to deployers. The Act also sets requirements for human oversight, accuracy, robustness, and cybersecurity. Implementing a quality management system and carrying out ongoing post-market monitoring are further key obligations.

Providers must complete a rigorous conformity assessment process, draw up an EU declaration of conformity, and affix the CE marking to their systems. Registration in an EU or national database is compulsory, requiring the provision of identification and contact information, as well as a demonstration of conformity and adherence to accessibility requirements. Diligent document retention and the generation of automated logs are also mandated.

The Act imposes a duty to:

Collectively, these measures aim to foster accountability, transparency, and the responsible development of high-risk AI systems within the EU.

02

Deployers of high-risk AI systems

Under the EU AI Act, deployers of high-risk AI systems also face clear obligations. They must implement and demonstrate measures to comply with the system provider’s instructions for use and assign human oversight to relevant individuals, ensuring their competence for the role.

Verifying the relevance and representativeness of input data is crucial, as is monitoring the system's operation and informing providers of any issues. Transparency and disclosure regarding AI decision-making processes are also required.

In addition to mandatory record-keeping, deployers that are employers must inform workers' representatives and affected workers before using a high-risk AI system in the workplace, and deployers that are public authorities must register their use in the EU database. All organisations deploying high-risk systems must cooperate with authorities, including participating in investigations and audits. Where applicable, deployers must use the information supplied by the provider to carry out a data protection impact assessment, and deployers using high-risk AI systems for post-remote biometric identification in criminal investigations must request authorisation from a judicial or administrative authority.

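There are additional obligations for deployers that are bodies governed by public law or private entities providing public services, and for deployers of high-risk AI systems used to evaluate creditworthiness or to assess risk and set prices in life and health insurance.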

These groups must also conduct fundamental rights impact assessments and notify the relevant market surveillance authority of the results, ensuring the protection of fundamental rights and adherence to ethical principles.

Saidot is a European SaaS platform that enables you to automatically identify relevant risks, get mitigation suggestions, and ensure compliance with ready-to-use policy templates.

03

Providers and deployers of specific systems with transparency risk

The EU AI Act also outlines several transparency obligations for providers and deployers of certain AI systems:

AI Interaction Transparency: Providers and deployers must ensure transparency when humans interact with an AI system. This includes making users aware that they are interacting with an AI rather than a human and providing clear information about the AI system's characteristics and capabilities.

Synthetic Content Disclosure and Marking Requirement: Providers of AI systems, including GPAI systems, that generate synthetic audio, image, video, or text content must ensure that the outputs are marked in a machine-readable format and are detectable as artificially generated or manipulated.

Emotion Recognition and Biometric Categorisation Transparency: For AI systems that perform emotion recognition or biometric categorisation, providers and deployers must ensure transparency about the system's operation and data processing activities. This includes providing information on the data used, the reasons for deploying the system, and the logic involved in the AI decision-making process.

Disclosure of AI-Generated Deepfake Content: Providers and deployers must disclose when content, including image, audio, and video formats, has been artificially created or manipulated by an AI system, commonly known as "deepfakes." This disclosure must be clear and easily understandable to the average person.

AI-Generated Public Interest Text Disclosure Obligation: For AI systems that generate text on matters of public interest, such as news articles or social media posts, providers and deployers must disclose that the content was generated by an AI system. This obligation aims to promote transparency and prevent the spread of misinformation.
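To illustrate the marking and disclosure obligations above, the sketch below attaches both a human-readable notice and a machine-readable provenance record to AI-generated text. It is a minimal sketch under stated assumptions: the function `label_generated_text`, its field names and the model name are hypothetical, and the Act does not prescribe this format. In practice, providers may rely on watermarking or content-provenance standards such as C2PA, which are harder to strip than plain metadata.

```python
import json
from datetime import datetime, timezone


def label_generated_text(text: str, model_name: str) -> dict:
    """Return AI-generated text with a visible disclosure and a machine-readable
    provenance record. The schema is hypothetical, not a standard."""
    provenance = {
        "ai_generated": True,
        "generator": model_name,
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }
    return {
        # Human-facing notice so the average reader can tell the content is synthetic.
        "display_text": f"{text}\n\n[This content was generated by an AI system.]",
        # Machine-readable record that downstream tools can detect.
        "provenance": provenance,
    }


labelled = label_generated_text("Summary of today's city council meeting.", "example-model-v1")
print(json.dumps(labelled, indent=2))
```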

04

Providers of general-purpose AI (GPAI)

The EU AI Act imposes specific requirements on providers of general-purpose AI (GPAI). These include disclosing and marking synthetic content generated by their systems; maintaining technical documentation detailing the training, testing, and evaluation processes and results; and providing information and documentation to providers integrating the GPAI model into their AI systems.

Compliance with copyright law is also required: providers must put in place a policy to comply with EU copyright law, including respecting rights holders' reservations of rights. Additionally, the Act requires providers to make publicly available a sufficiently detailed summary of the content used to train the model.

Cooperation with authorities is another fundamental obligation, requiring providers to participate in investigations, audits, and other regulatory activities as necessary.

05

Providers of general-purpose AI (GPAI) models with systemic risk

In addition to the obligations that the EU AI Act imposes on all providers of GPAI models, it places further requirements on providers of GPAI models with systemic risk.

What is a GPAI model with systemic risk?

Since systemic risks result from particularly high capabilities, a GPAI model is considered to present systemic risk if it has high-impact capabilities, evaluated using appropriate technical tools and methodologies, or if it has a significant impact on the EU market due to its reach.

For more information, refer to Article 51 of the EU AI Act.
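Article 51(2) adds a quantitative presumption: a GPAI model is presumed to have high-impact capabilities when the cumulative computation used for its training exceeds 10^25 floating-point operations (FLOPs). The sketch below estimates training compute with the widely used rule of thumb C ≈ 6 × N × D (N parameters, D training tokens). That heuristic comes from the machine-learning scaling literature, not from the Act, and the function names and example model sizes are hypothetical.

```python
# Presumption threshold from Article 51(2): cumulative training compute > 10^25 FLOPs.
SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25


def estimated_training_flops(num_parameters: float, num_training_tokens: float) -> float:
    """Rule-of-thumb estimate for dense transformer training: about 6 FLOPs per
    parameter per token (roughly 2 for the forward pass, 4 for the backward pass)."""
    return 6.0 * num_parameters * num_training_tokens


def presumed_high_impact(num_parameters: float, num_training_tokens: float) -> bool:
    """True if the estimated compute is above the Article 51(2) threshold."""
    return estimated_training_flops(num_parameters, num_training_tokens) > SYSTEMIC_RISK_THRESHOLD_FLOPS


# Hypothetical models: (parameters, training tokens)
for params, tokens in [(7e9, 2e12), (1e12, 1e13)]:
    flops = estimated_training_flops(params, tokens)
    print(f"{params:.0e} params, {tokens:.0e} tokens -> {flops:.1e} FLOPs, "
          f"presumed high-impact: {presumed_high_impact(params, tokens)}")
```

Measured compute from training logs is preferable to an estimate, and the Commission can also designate a model based on other criteria, so falling below the threshold does not settle the classification on its own.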

Requirements

These providers must conduct standardised model evaluations and adversarial testing to assess the system's robustness and identify potential vulnerabilities or risks.

Comprehensive risk assessment and mitigation measures are mandatory, including strategies to address identified risks and minimise potential harm. Providers must also establish robust incident and corrective measure tracking, documentation, and reporting processes – ensuring transparency and accountability in the event of system failures or adverse incidents.

Cybersecurity protection is a critical requirement, which means providers must implement robust safeguards to mitigate cyber threats and protect the integrity, confidentiality, and availability of their GPAI systems and associated data.

To help you get started, we've put together a free template for crafting your GenAI policy, including questions on each of the 12 recommended themes.

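On 13 March 2024, the European Parliament voted overwhelmingly to approve the new AI Act, with 523 votes in favour, 46 against, and 49 abstentions. The implementation of the AI Act will now transition through the following stages: entry into force 20 days after publication in the Official Journal; application of the prohibitions after 6 months; application of the GPAI rules after 12 months; application of most remaining provisions after 24 months; and application of the rules for high-risk systems covered by Annex I after 36 months.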

In the long term, the European Union will work with the G7, the OECD, the Council of Europe, the G20, and the United Nations to achieve global alignment on AI governance.

Latest update: On 12 July 2024, the EU AI Act was published in the Official Journal of the European Union, and it entered into force 20 days later, on 1 August 2024.
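The staged application dates can be kept in a small lookup table to check which obligations already apply on a given day. A minimal sketch: the dates summarise Article 113, the descriptions are simplified, and the names `MILESTONES`, `months_after_entry` and `applicable_on` are hypothetical; verify against the legal text before relying on it.

```python
from datetime import date

ENTRY_INTO_FORCE = date(2024, 8, 1)

# Application dates summarised from Article 113 (descriptions simplified).
MILESTONES = [
    (date(2025, 2, 2), "General provisions and prohibited AI practices (Chapters I and II)"),
    (date(2025, 8, 2), "GPAI model obligations, governance and penalties (except fines for GPAI providers)"),
    (date(2026, 8, 2), "Remaining provisions, including Annex III high-risk systems and GPAI fines"),
    (date(2027, 8, 2), "High-risk classification under Article 6(1) (products covered by Annex I)"),
]


def months_after_entry(day: date) -> int:
    """Whole calendar months between entry into force and the given date."""
    return (day.year - ENTRY_INTO_FORCE.year) * 12 + (day.month - ENTRY_INTO_FORCE.month)


def applicable_on(day: date) -> list[str]:
    """Descriptions of the milestones whose application date has been reached."""
    return [label for start, label in MILESTONES if day >= start]


for start, label in MILESTONES:
    print(f"{start.isoformat()} (+{months_after_entry(start)} months): {label}")
```

The milestones fall on the 2nd of the month rather than the 1st, so naively adding 6, 12, 24 or 36 months to 1 August 2024 lands one day early.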

The EU AI Act aims to ensure the safe, ethical, and trustworthy development and use of artificial intelligence technologies. Therefore, the Act contains strong enforcement mechanisms if companies or organisations fail to comply with its obligations.

The Act empowers market surveillance authorities in EU countries to issue fines for non-compliance. There are three tiers of fines, based on the severity of the infraction, ranging from supplying incorrect information to outright violations of the Act's prohibitions.

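The penalties for non-compliance are: up to €35 million or 7% of total worldwide annual turnover, whichever is higher, for engaging in prohibited AI practices; up to €15 million or 3% for non-compliance with most other obligations; and up to €7.5 million or 1% for supplying incorrect, incomplete, or misleading information to notified bodies or national competent authorities. For SMEs, including start-ups, each fine is capped at whichever of the two amounts is lower.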
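Each tier combines a fixed amount with a percentage of total worldwide annual turnover for the preceding financial year. A minimal sketch, assuming the Article 99 caps of €35 million/7%, €15 million/3% and €7.5 million/1%: the names `Infringement` and `max_fine_eur` are hypothetical, and the function computes only the statutory ceiling. The fine an authority actually imposes depends on factors such as the nature, gravity and duration of the infringement.

```python
from enum import Enum


class Infringement(Enum):
    """Article 99 fine tiers: (fixed cap in EUR, share of total worldwide annual turnover)."""
    PROHIBITED_PRACTICE = (35_000_000, 0.07)
    OTHER_OBLIGATION = (15_000_000, 0.03)
    INCORRECT_INFORMATION = (7_500_000, 0.01)


def max_fine_eur(kind: Infringement, worldwide_turnover_eur: float, is_sme: bool = False) -> float:
    """Return the ceiling of the administrative fine for one infringement.

    Most undertakings face the HIGHER of the fixed cap and the turnover-based amount;
    SMEs and start-ups face the LOWER of the two.
    """
    fixed_cap, turnover_share = kind.value
    turnover_based = turnover_share * worldwide_turnover_eur
    return min(fixed_cap, turnover_based) if is_sme else max(fixed_cap, turnover_based)


# Hypothetical organisations
large = max_fine_eur(Infringement.PROHIBITED_PRACTICE, 2_000_000_000)   # 7% of EUR 2bn exceeds EUR 35m
mid_size = max_fine_eur(Infringement.OTHER_OBLIGATION, 100_000_000)     # EUR 15m exceeds 3% of EUR 100m
sme = max_fine_eur(Infringement.INCORRECT_INFORMATION, 20_000_000, is_sme=True)  # 1% of EUR 20m is lower
print(f"{large:,.0f} {mid_size:,.0f} {sme:,.0f}")
```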

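The EU AI Act enforces compliance through fines, but these penalties will be phased in over time. Here's a simple breakdown:

Most penalties: The penalty provisions apply from 2 August 2025, one year after the Act entered into force.

Exceptions: Fines for providers of GPAI models apply from 2 August 2026.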

Here is a breakdown of some prohibited use cases under the EU AI Act:

The EU AI Act classifies certain AI systems as high-risk due to their potential impact on people's lives, rights, and safety. These high-risk systems require stricter compliance measures to ensure their responsible development and use.

Here's a breakdown of some key high-risk categories:

It's important to note that within these categories, exemptions exist for narrow, low-risk applications of AI systems. These exemptions generally apply to situations where the AI has minimal influence on a decision's outcome.

For more details on high-risk categories, refer to Annex III of the EU AI Act.

Here's a breakdown of the key factors to consider when assessing your AI system's risk level:

1. Potential Impact on People and Society:

2. Intended Purpose and Sector:

By considering these factors, you can start to examine which of the four risk levels your AI system might fall into: unacceptable (prohibited), high, limited, or minimal.

Remember: Transparency is crucial across all risk levels. Even for lower-risk systems, users should be aware they are interacting with AI.

Find out your risk level with the help of our AI Act Classifier.

Complying with the EU AI Act brings several advantages for companies and organisations. It can:

The EU AI Act establishes specific guidelines for the training data and datasets used in AI systems, particularly those classified as high-risk. Here's a breakdown of the key requirements:

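High-Quality Data: Training, validation, and testing datasets must possess three key qualities: they must be relevant, sufficiently representative, and, to the best extent possible, free of errors and complete in view of the intended purpose.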

Appropriate Statistical Properties: Datasets used in high-risk AI systems must have appropriate statistical properties, including, where applicable, those that apply to the persons or groups of persons in relation to whom the high-risk AI system is intended to be used. These characteristics should be visible in each individual dataset or in a combination of different datasets.

Data Transparency: Organisations developing high-risk AI systems should be able to demonstrate that their training data meets the aforementioned quality and fairness standards. This may involve documentation or traceability measures.

Contextual Relevance: To the extent required by their intended purpose, high-risk AI systems' training datasets should reflect the specific environment where the AI will be deployed. This means considering factors like geographical location, cultural context, user behaviour, and the system's intended function. For example, an AI used for legal text analysis in Europe should be trained on data that includes European legal documents.
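Some of these requirements, such as completeness and statistical representativeness, lend themselves to simple automated checks. A minimal sketch, assuming records are dictionaries: the function names, the `region` field, the expected population shares and the 5-percentage-point tolerance are all hypothetical. The Act does not prescribe specific metrics or thresholds, so checks like these support, rather than replace, documented human judgement about data quality.

```python
from collections import Counter


def missing_value_rate(records: list[dict], required_fields: list[str]) -> float:
    """Share of records in which at least one required field is absent or None."""
    if not records:
        return 1.0  # an empty dataset is treated as entirely incomplete
    incomplete = sum(1 for r in records if any(r.get(f) is None for f in required_fields))
    return incomplete / len(records)


def representation_gaps(records: list[dict], group_field: str,
                        expected_shares: dict[str, float], tolerance: float = 0.05) -> dict[str, float]:
    """Return groups whose share in the data differs from the expected share in the
    intended user population by more than `tolerance` (positive = over-represented)."""
    counts = Counter(r.get(group_field) for r in records)
    total = len(records)
    gaps = {}
    for group, expected in expected_shares.items():
        observed = counts[group] / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = round(observed - expected, 3)
    return gaps


# Hypothetical dataset: 8 records from "north", 2 from "south", one with a missing age.
sample = ([{"region": "north", "age": 34} for _ in range(7)]
          + [{"region": "north", "age": None}]
          + [{"region": "south", "age": 51} for _ in range(2)])

print(missing_value_rate(sample, ["region", "age"]))                        # 0.1
print(representation_gaps(sample, "region", {"north": 0.5, "south": 0.5}))  # {'north': 0.3, 'south': -0.3}
```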

While your obligations may vary depending on whether you are a deployer or provider of a high-risk AI system, or a provider of a GPAI model, there is a general framework you can follow to help you ensure compliance at every stage of the product lifecycle.

Here is a series of steps you can use to prepare to meet your obligations under the EU AI Act:

If you are a provider and you have determined that an AI system referred to in Annex III is not high-risk, you should document this assessment before you place it on the market or put it into service. You should also provide this documentation to national competent authorities upon request and register the system in the EU database.

In addition, market surveillance authorities can carry out evaluations on your AI system to determine whether it is actually high-risk. Providers who classify their AI system as limited or minimal risk when it is actually high-risk will be subject to penalties.

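You can determine whether an AI system qualifies for an exemption from high-risk categorisation based on one or more of the following criteria: the system performs a narrow procedural task; it improves the result of a previously completed human activity; it detects decision-making patterns or deviations from prior patterns and is not meant to replace or influence the previously completed human assessment without proper human review; or it performs a preparatory task to an assessment relevant to the Annex III use cases. However, an Annex III system that performs profiling of natural persons is always considered high-risk.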

For more information, refer to Article 6(3) and Annex III of the EU AI Act.
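The exemption logic can be sketched as a first-pass screening function. A minimal sketch, assuming a yes/no answer to each Article 6(3) question: the `AnnexIIISystem` dataclass, the `may_claim_exemption` function and the CV-formatting example are hypothetical. Because profiling of natural persons always keeps an Annex III system high-risk, it is checked first. A positive result is only a starting point; the overarching test is that the system does not pose a significant risk of harm.

```python
from dataclasses import dataclass


@dataclass
class AnnexIIISystem:
    """Yes/no answers to the Article 6(3) screening questions for an Annex III system."""
    performs_profiling: bool
    narrow_procedural_task: bool = False
    improves_completed_human_activity: bool = False
    detects_patterns_without_replacing_human_review: bool = False
    preparatory_task_only: bool = False


def may_claim_exemption(system: AnnexIIISystem) -> bool:
    """First-pass screen only. Profiling of natural persons always keeps the system
    high-risk; otherwise at least one Article 6(3) condition must apply. A positive
    result still needs a documented assessment and registration."""
    if system.performs_profiling:
        return False
    return any([
        system.narrow_procedural_task,
        system.improves_completed_human_activity,
        system.detects_patterns_without_replacing_human_review,
        system.preparatory_task_only,
    ])


# Hypothetical example: a tool that only converts unstructured CVs into a standard template.
cv_formatter = AnnexIIISystem(performs_profiling=False, narrow_procedural_task=True)
print(may_claim_exemption(cv_formatter))  # True
```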

What is the EU database?

The European Commission, in collaboration with the Member States, will set up and maintain an EU database containing information about high-risk AI systems.

How do you register?

For specific high-risk systems related to law enforcement and border control, registration occurs in a secure, non-public section of the EU database, accessible only to the Commission and national authorities. The information you submit should be easily navigable and machine-readable.

For more information, refer to Article 49 of the EU AI Act.

What information should you register, and who’s responsible?

The provider or their authorised representative must enter the following information into the EU database for each AI system:

For more information, refer to Annex VIII, Section A of the EU AI Act.

For AI systems deployed by public authorities, agencies, or bodies, the deployer or their representative must enter:

For more information, refer to Annex VIII, Section B of the EU AI Act.

Who sees this information?

The information in the EU database will be publicly accessible and presented in a user-friendly, machine-readable format to allow easy navigation by the general public.

However, there is an exception for information related to the testing of high-risk AI systems in real-world conditions outside of AI regulatory sandboxes. This information will only be accessible to market surveillance authorities and the European Commission, unless the prospective provider or deployer also provides explicit consent for it to be made publicly available.

For more information about this exception, refer to Article 60 of the EU AI Act.

For all other entries in the database, the details provided by providers, authorised representatives, and deploying public bodies will be openly accessible to the public. Providers should ensure the submitted information is clear and easily understandable.
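The visibility rules for database entries amount to a small decision procedure. A minimal sketch: the `Visibility` enum and `entry_visibility` function are hypothetical, and the precedence (law-enforcement and border-control systems checked first) is an assumption made for illustration.

```python
from enum import Enum


class Visibility(Enum):
    PUBLIC = "publicly accessible, machine-readable"
    SECURE_NON_PUBLIC = "Commission and national authorities only"
    AUTHORITIES_ONLY = "market surveillance authorities and the Commission only"


def entry_visibility(law_enforcement_or_border_control: bool = False,
                     real_world_test_outside_sandbox: bool = False,
                     consent_to_publish: bool = False) -> Visibility:
    """Who can see an EU database entry, following the rules described in this guide."""
    # Law enforcement and border-control systems go to the secure, non-public section.
    if law_enforcement_or_border_control:
        return Visibility.SECURE_NON_PUBLIC
    # Real-world testing outside sandboxes stays restricted unless the provider or deployer consents.
    if real_world_test_outside_sandbox and not consent_to_publish:
        return Visibility.AUTHORITIES_ONLY
    # All other entries are openly accessible.
    return Visibility.PUBLIC


print(entry_visibility(real_world_test_outside_sandbox=True).value)
```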

Using our handbook, you'll navigate AI with confidence to build trust, manage risks, ensure compliance, and unlock AI's full potential in a responsible way.

AI governance should be a systematic, iterative process that organisations can continuously improve upon. To achieve this, companies need to establish a robust, flexible framework designed to meet their compliance needs and responsible AI goals. This framework should include:

As regulations and technologies evolve, organisations must proactively update their governance frameworks to maintain compliance and alignment with best practices.

The European AI Board (EAIB) is an advisory body that provides technical expertise and advice on implementing the EU AI Act. It is composed of one representative per Member State, with the European Data Protection Supervisor participating as an observer and the AI Office attending meetings without taking part in votes.

The AI Board’s role is to facilitate a smooth, effective, and harmonised implementation of the EU AI Act. Additionally, the EAIB will review and advise on commitments made under the EU AI Pact.

Comprehensive AI risk assessments are a critical component of responsible AI governance. They allow stakeholders to proactively identify potential risks posed by their AI systems across domains such as health and safety, fundamental rights, and environmental impact. There is no single method or ‘silver bullet’ for conducting a risk assessment, but the National Institute of Standards and Technology (NIST) AI Risk Management Framework is a good example of a structured approach to identifying, analysing, and mitigating risks.

Another good starting point could be to use an organisation’s pre-existing processes to assess general risk and then assign scores to each potential risk posed by an AI system, depending on the impact and likelihood of each risk.

Typically, the risk assessment process involves first identifying and ranking AI risks as unacceptable (prohibited), high, limited, or minimal based on applicable laws and industry guidelines. For example, AI systems used for social scoring, exploiting vulnerabilities, or biometric categorisation based on protected characteristics would likely be considered unacceptable under the EU AI Act.

Once unacceptable risks are eliminated, the organisation should then analyse whether remaining use cases are high-risk, such as in critical infrastructure, employment, essential services, law enforcement, or processing of sensitive personal data. Lower risk tiers may include AI assistants like chatbots that require user disclosure.

The next step is to evaluate the likelihood and potential severity of each identified risk. One helpful tool is a risk matrix, which helps organisations characterise probability and impact as critical, moderate, or low to guide further action. A financial institution deploying AI credit scoring models, for instance, should assess risks like perpetuating demographic biases or unfair lending practices.
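The likelihood-and-impact scoring described above can be sketched as a small 3 × 3 risk matrix. A minimal sketch: the numeric scale (1 to 3), the multiplication of likelihood by impact, the rating cut-offs and the example risks are all assumptions for illustration, not values taken from the EU AI Act or the NIST framework.

```python
# Hypothetical 3-point scale shared by likelihood and impact.
LEVELS = {"low": 1, "moderate": 2, "critical": 3}


def risk_score(likelihood: str, impact: str) -> int:
    """Score = likelihood x impact, giving values between 1 and 9."""
    return LEVELS[likelihood] * LEVELS[impact]


def rating(score: int) -> str:
    """Map a score back to a rating; the cut-offs are illustrative assumptions."""
    if score >= 6:
        return "critical"
    if score >= 3:
        return "moderate"
    return "low"


# Hypothetical risk register for an AI credit-scoring model: name -> (likelihood, impact)
register = {
    "perpetuating demographic bias in credit decisions": ("moderate", "critical"),
    "performance drift after deployment": ("moderate", "moderate"),
    "inconsistent explanation wording": ("low", "low"),
}

# Highest-scoring risks first, to prioritise mitigation.
for name, (likelihood, impact) in sorted(register.items(), key=lambda item: -risk_score(*item[1])):
    score = risk_score(likelihood, impact)
    print(f"{rating(score):<9}{score}  {name}")
```

Multiplying the two scales is a common convention because it ranks a likely, severe risk above either factor alone; organisations with an existing enterprise risk matrix can swap in their own scale and thresholds.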

Thorough documentation of risks, mitigation strategies, and human oversight measures is essential for demonstrating accountability. The EU AI Act will also require fundamental rights impact assessments to analyse affected groups and human rights implications for high-risk AI use cases.

Importantly, risk assessments aren't a one-time exercise. They require continuous monitoring and updates as AI capabilities evolve and new risks emerge over the system's lifecycle. Proactive, holistic assessments enable the deployment of AI systems that operate within defined, acceptable risk tolerances.

Establishing robust accountability and leadership structures is crucial for effective AI governance. Clear roles and responsibilities should be designated for key aspects like data management, risk assessments, compliance monitoring, and ethical oversight. These structures must transparently show decision-making authorities and reporting lines, enabling timely interventions when needed. They should also be regularly reviewed and updated to align with evolving regulations, best practices, and the organisation's growing AI capabilities.

To establish these accountability structures, organisations should thoroughly assess their AI initiatives, identify associated risks and stakeholders, and consult relevant subject matter experts. Those appointed to AI governance roles must receive proper training and resources, fostering a culture of shared responsibility and continuous improvement around responsible AI practices.

The guidance aligns with recommendations from the Center for Information Policy Leadership (CIPL), which strongly advises organisations to create robust leadership and oversight structures as the foundation for accountable AI governance. This begins with the senior executive team and board visibly demonstrating ethical commitment through formal AI principles, codes of conduct, and clear communications company-wide.

This is just the start. The CIPL also recommends appointing dedicated AI ethics oversight bodies, whether internal cross-functional committees or external advisory councils with diverse expertise. The key is ensuring these bodies have real authority to scrutinise high-risk AI use cases, resolve challenges, and ultimately shape governance policies and practices.

These leadership structures could include designated "responsible AI" leads or officers who drive the governance programme holistically across business units. A centralised governance framework gives organisations strategic direction while allowing teams the flexibility to adapt practices to their own context.

However, this recommendation will not be right for everyone, so it’s key to examine your organisation’s individual needs and capabilities. Another way to enhance your AI compliance efforts is to take advantage of and expand any existing privacy teams and roles to include AI ethics, data responsibility, and digital trust. Ultimately, successful frameworks will require multidisciplinary teams that combine legal, ethical, technical, and business perspectives.

Organisations should establish a comprehensive system for reporting AI-related incidents, including data breaches, biases, or unintended consequences. This system should also include mechanisms for prompt identification, documentation, and escalation of incidents, enabling timely and transparent responses. When incidents occur, acting promptly and transparently is essential, as is taking the necessary steps to address and resolve the issues, mitigate potential impacts, and prevent future occurrences.

For example, if we imagine an organisation operating AI-powered autonomous vehicles, its monitoring and incident reporting mechanisms might include deploying real-time monitoring systems to scrutinise the decisions made in navigation, instituting agile incident reporting mechanisms such as anomaly detection alerts, and enacting protocols for immediate intervention in response to safety concerns or system malfunctions, ensuring operational integrity and passenger safety.
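The identify, document and escalate sequence described above can be sketched as a threshold-based anomaly alert feeding an incident log. A minimal sketch under stated assumptions: the metric name, the 150 ms limit, the severity levels and the escalation routes are hypothetical, and a production system would persist incidents and notify people rather than print.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Hypothetical escalation routes; each organisation defines its own.
ESCALATION = {
    "low": "system owner",
    "medium": "AI governance lead",
    "high": "incident response team and senior management",
}


@dataclass
class Incident:
    system: str
    description: str
    severity: str  # "low", "medium" or "high"
    detected_utc: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def escalate_to(self) -> str:
        return ESCALATION[self.severity]


INCIDENT_LOG: list[Incident] = []


def check_metric(system: str, metric: str, value: float, limit: float,
                 severity: str = "high") -> Optional[Incident]:
    """Anomaly alert: log and return an incident if a monitored metric exceeds its limit."""
    if value <= limit:
        return None
    incident = Incident(system, f"{metric}={value} exceeded limit {limit}", severity)
    INCIDENT_LOG.append(incident)  # documentation step
    return incident


alert = check_metric("av-navigation", "obstacle_detection_latency_ms", 180, 150)
if alert:
    print(f"[{alert.severity}] {alert.description} -> escalate to {alert.escalate_to()}")
```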

Organisations should develop clear procedures to address issues or errors identified through human oversight mechanisms, ensuring appropriate interventions and course corrections can be made in a timely manner. It is essential to ensure that there is always a 'human in the loop' when it comes to decision-making and actions taken by AI systems. This means human judgment has the final say in high-impact situations.

For example, a hypothetical healthcare provider using AI-assisted diagnostic tools may have human medical professionals review and validate the AI's recommendations, ensuring that any potential errors or biases are identified and corrected before treatment decisions are made.
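A 'human in the loop' can be enforced in software by refusing to finalise any AI recommendation without a named reviewer. A minimal sketch: the `Recommendation` and `Decision` types, the `finalise` function and the clinical example are hypothetical, and a real system would also record the reviewer's reasoning for audit purposes.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    case_id: str
    suggestion: str


@dataclass
class Decision:
    recommendation: Recommendation
    reviewer: str
    approved: bool
    final_action: str


def finalise(rec: Recommendation, reviewer: Optional[str], approved: bool,
             override: Optional[str] = None) -> Decision:
    """Turn an AI recommendation into a decision only after a named human has reviewed it.

    If the reviewer rejects the suggestion, their own action (or a referral) is used instead.
    """
    if not reviewer:
        raise PermissionError("A qualified human reviewer must sign off before any treatment decision.")
    action = rec.suggestion if approved else (override or "refer for further assessment")
    return Decision(rec, reviewer, approved, action)


rec = Recommendation("case-001", "order chest X-ray")
print(finalise(rec, "Dr Example", approved=False, override="order CT scan").final_action)
```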

In order to protect fundamental rights, organisations deploying high-risk AI systems must carry out a fundamental rights impact assessment before use. This applies to public bodies, private operators providing public services, and certain high-risk sectors like banking or insurance.

The aim is to identify specific risks to individuals or groups likely to be affected by the AI system. Deployers must outline measures to mitigate these risks if they materialise.

The impact assessment should cover the intended purpose, time period, frequency of use, and categories of people potentially impacted. It must identify potential harms to fundamental rights.

Deployers should use relevant information from the AI provider's instructions to properly assess impact. Based on identified risks, they must determine governance measures, such as human oversight and complaint procedures.

After the assessment, the deployer must notify the relevant market surveillance authority. Stakeholder input from affected groups, experts and civil society may be valuable. The AI Office will develop a questionnaire template to facilitate compliance and reduce the administrative burden for deployers.
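The elements of a fundamental rights impact assessment listed above can be tracked as a structured record with a completeness check before notification. A minimal sketch: the class, its field names and the credit-scoring example are hypothetical and do not reflect the AI Office's forthcoming questionnaire template.

```python
from dataclasses import dataclass, field, fields


@dataclass
class FundamentalRightsImpactAssessment:
    """Elements a deployer's assessment should cover, as described in this guide."""
    intended_purpose: str = ""
    period_and_frequency_of_use: str = ""
    affected_categories_of_people: list[str] = field(default_factory=list)
    identified_harms: list[str] = field(default_factory=list)
    human_oversight_measures: list[str] = field(default_factory=list)
    mitigation_and_complaint_procedures: list[str] = field(default_factory=list)

    def missing_items(self) -> list[str]:
        """Names of elements that are still empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def ready_to_notify(self) -> bool:
        """Completeness check before notifying the market surveillance authority."""
        return not self.missing_items()


fria = FundamentalRightsImpactAssessment(
    intended_purpose="Creditworthiness assessment of consumer loan applicants",
    period_and_frequency_of_use="Continuous use over a 12-month pilot",
    affected_categories_of_people=["loan applicants", "co-signers"],
    identified_harms=["indirect discrimination via postcode proxies"],
)
print(fria.missing_items())  # ['human_oversight_measures', 'mitigation_and_complaint_procedures']
```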

Sarah Bird, CPO, Responsible AI at Microsoft

For many organisations, AI governance and compliance can be an overwhelming process. If you need to keep up with the ever-evolving landscape of AI regulations, including the EU AI Act, Saidot Library can provide the information you need. It is a curated, continuously updated knowledge base on AI governance and includes:

If you require more holistic advice and support on your AI governance journey, our experts can work with you to develop and implement AI governance frameworks tailored to your organisation’s specific needs and industry. We offer governance-as-a-service, so you can get started with high-quality governance and learn from our experts as you set up your first AI governance cases.

Apply systematic governance at every step of your system lifecycle. Understand regulative requirements and implement your system in alignment with them. Identify and mitigate risks, and monitor performance of your AI systems.

Access a wide range of AI regulations and evaluate whether your intended use cases are allowed under the EU AI Act, for example. Operationalise legal requirements via templates and collaborate with AI teams to ensure effortless compliance.

Recent guidelines for AI Act implementation: